Google has unveiled an upgraded version of its experimental medical chatbot, the Articulate Medical Intelligence Explorer (AMIE), showcasing a significant leap in its diagnostic capabilities.

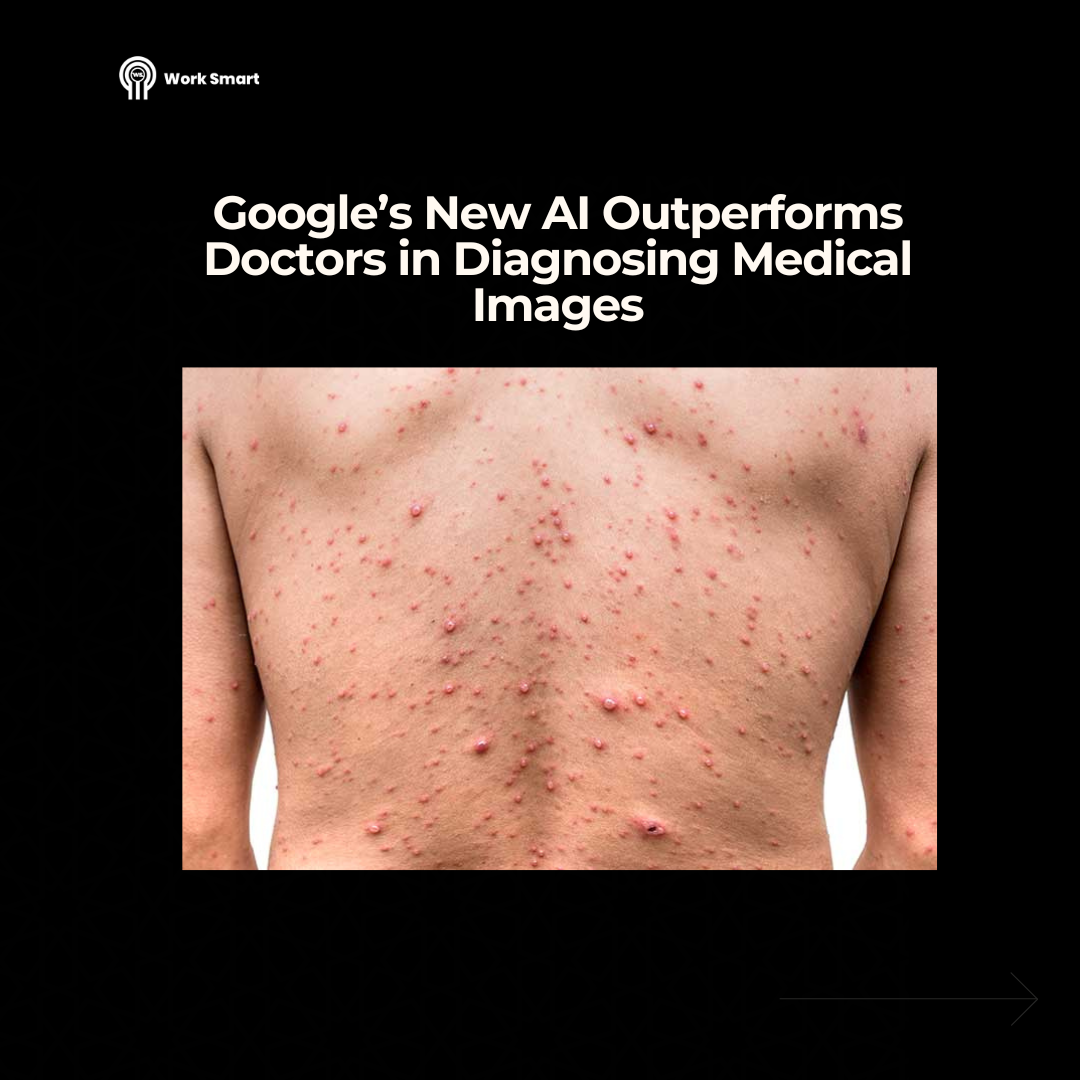

This new iteration can analyze smartphone photos of rashes and interpret other medical images and documents, including electrocardiograms (ECGs) and PDFs of lab results.

The enhanced AMIE is built on Google's Gemini 2.0 Flash large language model and has been adapted specifically for medical reasoning and diagnostic conversations. The researchers also added an algorithm designed to improve how it conducts patient-physician dialogues and reaches accurate conclusions.

In a simulated study in which actors played patients with a range of medical conditions and supplied relevant images, AMIE diagnosed more accurately than human primary-care physicians.

Notably, its performance remained robust even when presented with lower-quality images, a challenge that can sometimes hinder human interpretation.

Eleni Linos, director of the Stanford University Center for Digital Health, who was not involved in the research, sees the work as significant. She believes that combining images with clinical information "brings us closer to an AI assistant that mirrors how a clinician actually thinks."

Ryutaro Tanno, a scientist at Google DeepMind and co-author of the study, highlights the meticulous process of refining the AI’s conversational abilities.

By simulating patient-physician dialogues and adding an evaluation mechanism, the team aimed to "imbue it with the right, desirable behaviours when conducting a diagnostic conversation."

The tool is still experimental and the study has not yet been peer reviewed. Even so, it suggests a future in which AI serves as a powerful assistant to clinicians, potentially enabling more accurate and efficient diagnoses across a wider range of medical conditions.
